Genetic Algorithms

Having gone to a catholic highschool, my freshman year I was required to take a call called “Catholic Identity”, which basically when over what it meant to be a catholic, how that differed from other Christian religions. I had a particularly zealous and devout teacher, who taught a lesson on creationism vs evolution. He fully believed in creationism, and when he argued against evolution, it was very clear he had no idea how evolution supposedly worked.

His demonstration for that lesson was to take a piece of paper, and he called it beautiful. The edges were nice and clean, the corners were square, it was without a doubt order. He then tears it up into dozens of pieces and throws them up into the air. When the land on the ground, he says “that is chaos, there is no order. Evolutionists believe that we got order from chaos.”. As the time my debate team teenage mind immediately picked a fight saying that we don’t know that humans are order or beautiful since beauty is in the mind of the beholder. I also pointed out that if we tossed that if square foot on every habitable planet dropped a piece of torn up piece of paper, for every second for the 13 billion or so years since the big bang, we would probably find “order” once or twice; which is all we know we have found “order” for since we are the only planet known to us to have life.

At this point he moved on. I was well versed at the time of the argument against creationism; however at the time, I was not as well versed in the power of evolution. I didn’t think much of again for another 7 years or so.

Inspiration

Fast forwarding through college, working in R&D at a factory, and my first few jobs in software, I came across this video on the NEAT algorithm. NEAT stands for NeuroEvolution of Augmenting Topologies. The video goes through an experiment where the game a dumb neural network the inputs and outputs into a game of mario, and “bred” a super ai that could beat that level.

Essentially, it randomly generated an neural net connecting blocks on the screen relative to Mario, fed it through randomly generated hidden layer nodes, and connected them to the output nodes, or the inputs into the video game on the controller. Most of the “species” would randomly play the level and be terrible at it. Each species was graded on how far into the level it got. The most “fit” from each generation were “bred” together to form a new generation. Each new generation would also have some portion of random mutations.

Over many generations, the species with the traits that helped maximize fitness became more common, raising the max fitness for each generation and slowly moving the average fitness. Over time, the breeding program produces a neural net that can beat that level of mario.

I thought that was super cool. A program that could solve any arbitrary problem that has inputs and outputs and a means of measuring fitness. However at the time, the thing I grabbed onto was Neural Networks. Programming modeling the brain was hot in tech, and with courses like fast.ai, I had a means of getting at learning them.

Returning to Genetic Algorithms

Fast forward another few years, any my abandoning Neural networks for lack of large data sets and computing power to train deep learning convoluted neural nets; Youtube recommended me the Code Bullet channel, which has demos of NEAT and other algorithms playing games. This resparked my interest in genetic algorithms and I set out to play around with some, even without the neural networks.

Related Tangent: Battleship Space

On a soon to be related note that helps provide some context, in mid 2015 I attempted to build one of my dream games; A multi-player on a single ship. space shooter. The true idea was an amalgamation of Mass Effect 2’s starship scenes where you had different characters manning different stations; FTL where different weapons on a ship as well as energy management between systems and shields; all of the spaces scenes from Stargate, and almost the entirety of Star Trek. As a side note, my dream game as come out on the Oculus in the form of Star Trek Bridge Crew, but prior to that, I tried writing a 2D version of the game in PhaserJS.

Some Previews of the Game I ended up with:

I wanted the game to have “realistic” physics, so when you stop accelerating you continue moving. It certainly made piloting difficult enough that it was a full time job on its own. However the way I ended up doing the physics engine made it difficult to keep two browsers in sync over a network so I ended up scrapping the multiplayer portion of the game. Instead I opted to write levels of swarms of AI’s to fight.

Unfortunately, writing an AI to abide by my realistic laws of physics was harder than anticipated, so it ended up being : find ship -> move into weapons range -> turn to ship -> fire weapons -> repeat. Which made the only hard part of the game was when you had too many AI’s to face.

Building Out A Simple Genetic Algorithm

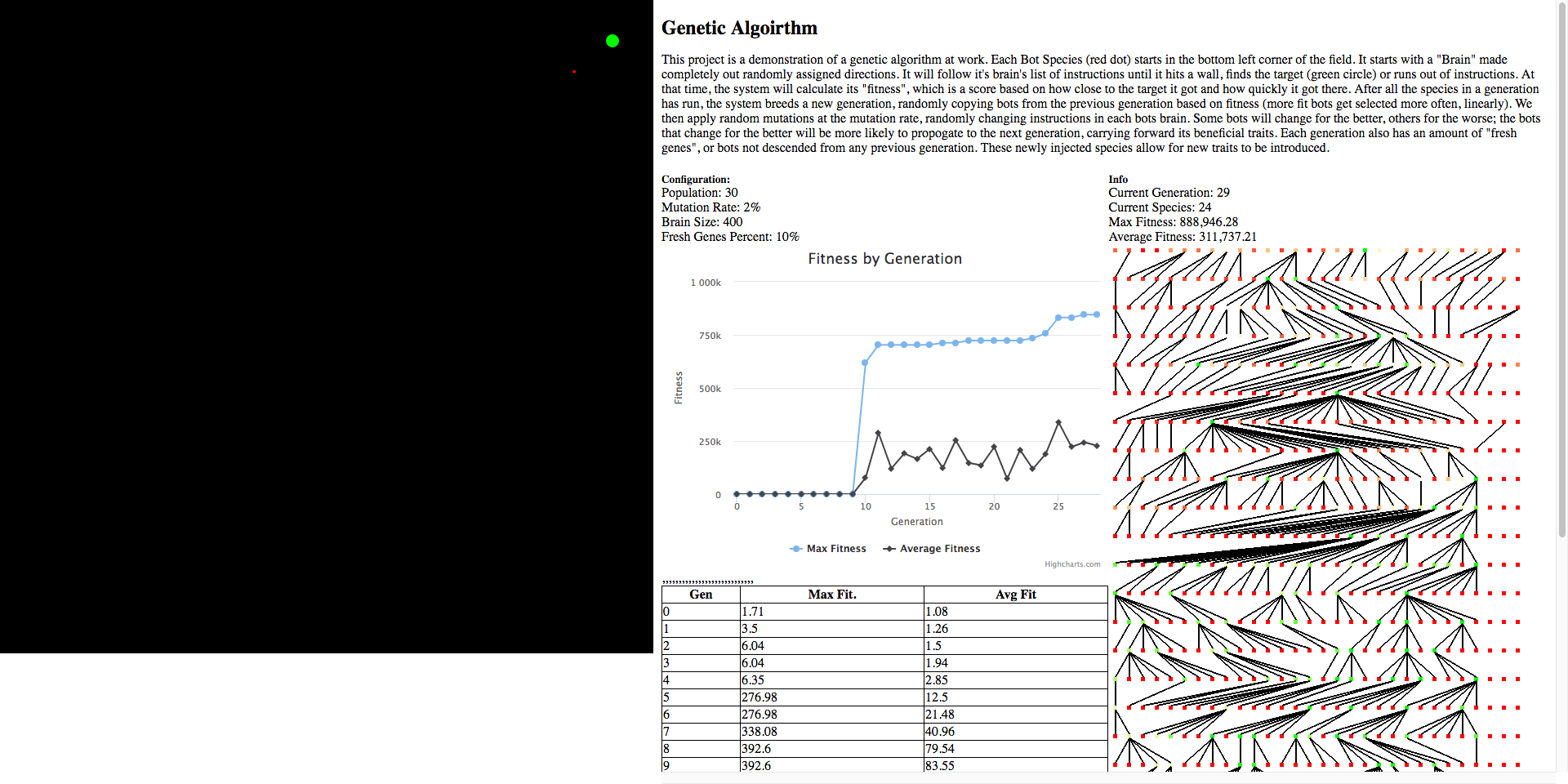

So following the overview written in processing, I set out to build a similar AI in Typescript and PhaserJS. I chose PhaserJS because my game was written in it and I knew things like collisions and distances calculations were built in. Writing in Typescript helps keep things straight and bug free. The major change I made was to test 1 species at a time, as opposed to an entire generation at a time. This was in preparation of training more advanced AI’s that couldnt be tested in parallel.

Initially I tried writing the system from intiution after writing the video. The steps are pretty simple:

- Create a generation of randomly generated Bots with brains that consist of random directions.

- Load A bot into the game

- Let the bot follow its brains instructions until completion

- Grade the End location via a fitness function that increases the closer you get.

- Repeat steps 1-3 until generation is fully measure for fitness.

- Find Total Fitness

- Randomly Select Next Generation based on the ratio of their fitnesses

- Randomly Mutate the Brains of the new Generation

- Repeat steps 4-7 Forever

I made certain key numbers controls that were configurable. Things such as the starting position of a bot, the target position, the Generation Population size, the Mutation rate of a brain in each new generation, How big each brain was. I also added a “Fresh Genes Percent” where some portion of each generation would be randomly generated from scratch. This allows for the chance that an outside species could so happen to be better than the bred version, allowing for new genes to be introduced into the gene pool.

After getting everything working, I started iterating, adding a chart and table for fitness over time, then a genealogy tree so to show the origins of species and allow observers to visualize the changes in health and how that affected the gene pool.

The end results is can be found here: https://mc706.github.io/phaser-genetic-intro

For the Lazy

Lesson Learned

Genetic Algorithms Take Time. In this example, small mutations in the bots brain greatly affects the total fitness. (randomly mutating a direction early in the instructions list can have the bot end up in a wrong corner).

Fitness algorithms need steep gradients. Initially I had my intuitive function for fitness to be 1 / distance to target at death. This resulted in the difference between a good and an OK run being not that great, which meant that improvements were very slow. Changing the fitness to be 1 / distance ** 2 made small improvements in distance equate to large increases it fitness. This meant that it much more strongly favored improvements when selecting a next generation.

Using a Step Function for Fitness Helps For Optimizing more than one thing. The other thing my Intuitive fitness function got wrong was taking into account the number of steps take, the less the better. This inadvertently caused the bots who died by running directly into the wall to have better fitness. By make the step function where the numbers of steps was only taken into account if a Bot reached the target, this allowed the optimizations to occur in order.

Always Room for Improvement. I left one of simulations running over night and found that while over time it stalled in improving for periods, it always found a better way. Then out of nowhere, the were be a huge leap in fitness, followed by another plateau. I have yet to calculate the theoretical max fitness, however

Things to Experiment With

I have yet to see if population size has any really meaningful impact on the learning rate based when doing it 1 species at a time. Since it is the generation boundary that does the mutations, when doing things 1 at a time, doing 10 generations of 500 or 100 generations of 50 should theoretically be similar. If anything, the 100 generations of 50 might improve faster.

I am super interested in how doing species analysis and categorizing types. I remember the MarI/O video mentions breaking down bred algorithms into species and genera, and I was surprised to see the emergence of these patterns in the genealogy graph. There would be large jump in fitness and a species would dominate, then its decendates would be even, so a lot more speices would emerge, then a few species would complete for dominance again.

Next Steps

So I am going to try to fold some of the knowledge I learned in fast.ai about neural networks back into this genetic algorithm and try breeding neural networks. Given the basic setup I have, I can also try to write some more browser games and integrate the NEAT algorithm into them.

Eventually, once I have a strong handle on NEAT, I would like to try to build the AI for battship space to be a NEAT evolved net, and see how hard the game gets.